3D camera sensors are revolutionizing how we capture and interpret the world around us, extending traditional photography with a dimension it never recorded: depth. These devices combine depth-sensing technology with conventional image capture to create immersive, three-dimensional representations of scenes and objects.

At the heart of modern 3D imaging lies an intricate dance between light, sensors, and computational power. Unlike conventional cameras that capture flat, two-dimensional images, 3D camera sensors employ multiple techniques – from structured light patterns to time-of-flight measurements – to build detailed depth maps alongside visual data. This breakthrough technology enables everything from facial recognition in smartphones to autonomous vehicle navigation and precise industrial quality control.

The impact of 3D camera sensors extends far beyond traditional photography. In healthcare, they’re enabling non-invasive body scanning and precise surgical navigation. In augmented reality, they’re creating seamless interactions between digital and physical worlds. For creators and innovators, these sensors represent a new frontier in visual storytelling and practical applications, offering unprecedented levels of detail and spatial awareness.

As we stand at the cusp of a new era in imaging technology, understanding 3D camera sensors becomes crucial for anyone involved in photography, technology, or digital innovation. Their potential to transform industries and create new possibilities continues to expand with each technological advancement.

Understanding 3D Camera Sensors

Traditional vs. 3D Sensor Technology

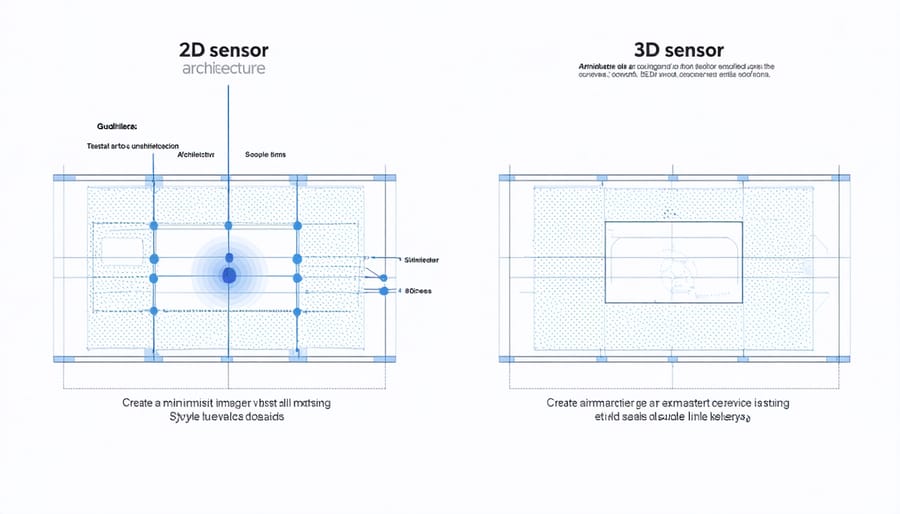

While traditional camera sensors record light intensity and color information on a two-dimensional plane, 3D sensor technology takes imaging into the third dimension, literally. Traditional sensors capture images by converting light into electrical signals through millions of photodiodes, creating flat representations of scenes, but they cannot directly measure depth or distance.

3D sensors, on the other hand, use techniques like time-of-flight (ToF), structured light, or stereoscopic vision to capture spatial information. Active systems emit their own light and either time how long it takes to bounce back (ToF) or project a known pattern and measure how it deforms (structured light), while stereoscopic systems compare images taken from two offset viewpoints. Either way, the result is a detailed depth map alongside regular image data.

The key advantage of 3D sensors is their ability to understand the physical space they’re capturing. While traditional sensors might need complex algorithms to estimate depth from 2D images, 3D sensors capture this information directly, enabling more accurate depth perception, better focus capabilities, and enhanced augmented reality experiences.

Depth Sensing Mechanisms

3D camera sensors employ several sophisticated methods to capture depth information, with two primary approaches leading the way: structured light and time-of-flight (ToF) technology. In structured light systems, the sensor projects an invisible infrared pattern onto the scene. By analyzing how this pattern deforms when it hits objects at different distances, the sensor can calculate precise depth measurements.
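To make the triangulation step concrete, here is a minimal Python sketch of one common structured-light coding scheme, binary Gray-code stripes. This is a multi-shot variant chosen for clarity; single-shot infrared dot patterns are decoded differently. It assumes a rectified camera-projector pair sharing the same focal length in pixels, so a decoded projector column and the observed camera column give a disparity d, and depth follows from Z = f·B/d. The function names and numbers are illustrative, not any product's API.

```python
def gray_code_bits(column: int, num_bits: int) -> list[int]:
    """Bits (most significant first) of the Gray code the projector shows for a column."""
    gray = column ^ (column >> 1)
    return [(gray >> i) & 1 for i in reversed(range(num_bits))]


def decode_gray(bits: list[int]) -> int:
    """Recover the projector column from the Gray-code bits seen at one camera pixel."""
    value, binary_bit = 0, 0
    for g in bits:
        binary_bit ^= g                      # b_i = b_(i-1) XOR g_i
        value = (value << 1) | binary_bit
    return value


def triangulate_depth(camera_col: float, projector_col: float,
                      focal_px: float, baseline_m: float) -> float:
    """Depth Z = f * B / d for a rectified camera-projector pair (assumed geometry)."""
    disparity = abs(camera_col - projector_col)
    if disparity == 0:
        raise ValueError("zero disparity: point at infinity or a decoding error")
    return focal_px * baseline_m / disparity


# Hypothetical example: a pixel at camera column 340 sees the code for projector column 300.
bits_seen = gray_code_bits(300, num_bits=10)
proj_col = decode_gray(bits_seen)
print(proj_col, triangulate_depth(340, proj_col, focal_px=600.0, baseline_m=0.075))
```

Gray codes are used so that neighboring stripes differ in only one bit, which limits a misread at a stripe boundary to an error of one column.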

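Time-of-flight sensors work more like lidar, sending out light and measuring how long it takes to bounce back to the sensor. Because light travels at a known, constant speed, the distance is half the round-trip time multiplied by the speed of light. Many ToF sensors measure the phase shift of continuously modulated light rather than timing individual pulses. Since every pixel gets a depth reading in each frame, the method is particularly effective for real-time applications.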
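The arithmetic behind time-of-flight fits in a few lines. The sketch below covers the pulsed case, d = c·t/2, and the continuous-wave case, where a phase shift φ at modulation frequency f gives d = c·φ/(4πf) and distances repeat every c/(2f). The 20 MHz modulation frequency is an illustrative assumption, not a value from any particular sensor.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def distance_from_round_trip(round_trip_s: float) -> float:
    """Pulsed (direct) ToF: light travels out and back, so halve the path."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def distance_from_phase(phase_rad: float, mod_freq_hz: float) -> float:
    """Continuous-wave ToF: the phase shift of the modulated signal maps to distance."""
    wrapped = phase_rad % (2.0 * math.pi)   # phase repeats every 2*pi
    return SPEED_OF_LIGHT * wrapped / (4.0 * math.pi * mod_freq_hz)


def unambiguous_range(mod_freq_hz: float) -> float:
    """Beyond this distance the measured phase wraps around and depth aliases."""
    return SPEED_OF_LIGHT / (2.0 * mod_freq_hz)


print(distance_from_round_trip(10e-9))        # a 10 ns round trip
print(unambiguous_range(20e6))                # 20 MHz modulation (assumed)
print(distance_from_phase(math.pi, 20e6))     # half a cycle of phase shift
```

The numbers also show why ToF timing must be so precise: a timing error of just 1 ns shifts the measured distance by about 15 cm.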

A third approach, stereoscopic imaging, works much like human eyes. By capturing two slightly offset images and measuring how far each feature shifts between them (the disparity), the system can triangulate depth and build a detailed depth map. Some systems combine stereo with one of the active methods above, for example by projecting an infrared texture that helps matching on blank surfaces. Modern smartphones often combine several of these depth cues, enabling features like portrait mode and augmented reality applications.
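Stereo matching can be sketched with sum-of-absolute-differences (SAD) block matching along one row of a rectified image pair, a textbook technique chosen here for illustration; production systems add sub-pixel refinement, smoothness constraints, and outlier checks. The sketch assumes the right camera sits to the right of the left one, so a feature at column x in the left image appears at x − d in the right image. The focal length and baseline values are hypothetical.

```python
def match_disparity(left_row, right_row, x, half_window=1, max_disparity=16):
    """Return the disparity d minimizing SAD between a window at x (left) and x - d (right)."""
    if x - half_window < 0 or x + half_window >= len(left_row):
        raise ValueError("window around x falls outside the row")
    best_d, best_cost = 0, float("inf")
    for d in range(max_disparity + 1):
        xr = x - d
        if xr - half_window < 0:
            break  # the candidate window would leave the right image
        cost = sum(abs(left_row[x + k] - right_row[xr + k])
                   for k in range(-half_window, half_window + 1))
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Triangulated depth Z = f * B / d (rectified pinhole cameras assumed)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


# Synthetic scanline: the right view is the left view shifted 3 pixels to the left.
left = [0, 0, 0, 0, 0, 0, 0, 0, 5, 9, 2, 7, 0, 0, 0, 0, 0, 0, 0, 0]
right = left[3:] + [0, 0, 0]
d = match_disparity(left, right, x=9, half_window=1, max_disparity=6)
print(d, depth_from_disparity(d, focal_px=700.0, baseline_m=0.012))
```

Featureless regions (rows of zeros here) give many equally good matches, which is exactly why active stereo projects a texture onto blank surfaces.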

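The accuracy of these mechanisms typically ranges from a few millimeters up to several centimeters, depending on the distance to the subject and the specific technology used. For triangulation-based methods (structured light and stereo), depth error grows roughly with the square of the distance, so a sensor that is millimeter-accurate at arm's length can be off by centimeters across a room.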
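The distance dependence can be made explicit for triangulation. Differentiating Z = f·B/d gives |ΔZ| ≈ Z²·Δd/(f·B), so a fixed matching error Δd (in pixels) costs four times as much depth accuracy each time the distance doubles. The sketch below uses an assumed 600-pixel focal length, 5 cm baseline, and quarter-pixel matching error; real sensors differ.

```python
def depth_error_m(depth_m, focal_px, baseline_m, disparity_error_px):
    """First-order depth uncertainty for triangulation: dZ ~= Z^2 * dd / (f * B)."""
    return depth_m ** 2 * disparity_error_px / (focal_px * baseline_m)


for z in (0.5, 1.0, 2.0, 5.0):
    err_mm = 1000 * depth_error_m(z, focal_px=600.0, baseline_m=0.05, disparity_error_px=0.25)
    print(f"{z:.1f} m -> about {err_mm:.1f} mm")
```

With these assumed parameters the error grows from about 2 mm at half a meter to roughly 3 cm at 2 m, consistent with the millimeter-to-centimeter range quoted above.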

Quantum Dot Technology: The Game Changer

What Are Quantum Dots?

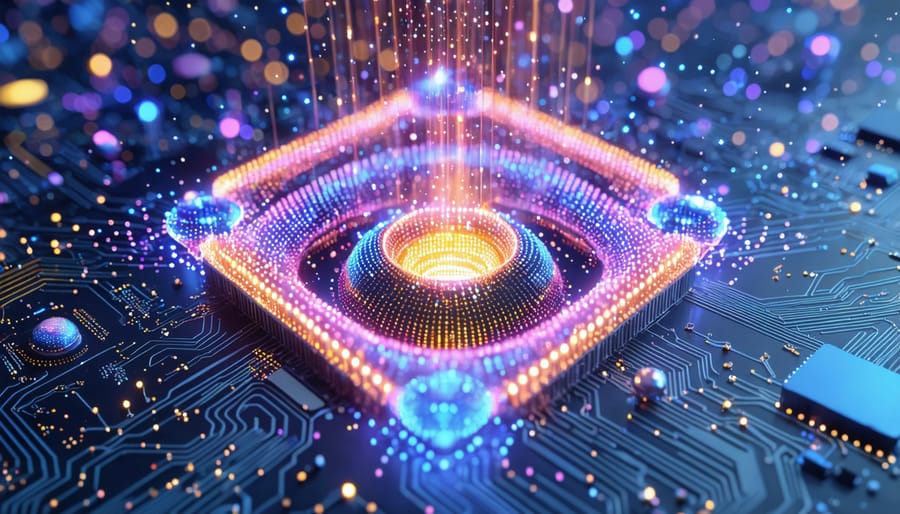

Imagine tiny crystals so small that they’re measured in nanometers – that’s what quantum dots are. These microscopic semiconductors possess remarkable properties that make them revolutionary for camera sensor technology. Just as different sized guitar strings produce different notes, quantum dots of varying sizes respond to different wavelengths of light, creating more precise and vibrant color reproduction in digital imaging.

What makes quantum dots truly special is that their optical behavior depends on their size. When light hits these nanocrystals, they absorb it efficiently and can re-emit light at a specific wavelength set by their size. This unique characteristic has led to breakthrough developments in quantum dot image enhancement, particularly in low-light photography.

Think of quantum dots as tiny, tunable light catchers that can absorb photons and convert them into signal more effectively than some traditional sensor materials. Their size typically ranges from 2 to 10 nanometers, with larger dots emitting redder light and smaller ones bluer light; the same size dependence sets the longest wavelength a dot can absorb. In a camera sensor, this lets designers tune a quantum dot layer to the colors or infrared band it should capture, which can yield more detailed color information and clear images from less light.
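The size-to-color relationship can be estimated with the Brus equation, a first-order effective-mass model: the bandgap of a dot of radius R is the bulk bandgap plus a confinement term that scales as 1/R², minus a smaller Coulomb term that scales as 1/R. Note that R is the radius, so the 2 to 10 nm sizes above correspond to R of roughly 1 to 5 nm. The material constants below are commonly quoted values for cadmium selenide (CdSe), used here as assumptions; the model is known to overestimate the shift for the smallest dots, so treat the output as a trend rather than a prediction.

```python
import math

HBAR = 1.054571817e-34      # reduced Planck constant, J*s
M0 = 9.1093837015e-31       # electron rest mass, kg
Q = 1.602176634e-19         # elementary charge, C
EPS0 = 8.8541878128e-12     # vacuum permittivity, F/m
HC_EV_NM = 1239.84198       # h*c in eV*nm

# Illustrative CdSe parameters (commonly quoted literature values, assumed here).
EG_EV = 1.74                # bulk bandgap, eV
ME, MH = 0.13, 0.45         # effective electron / hole masses, in units of M0
EPS_R = 10.6                # relative permittivity


def bandgap_ev(radius_nm: float) -> float:
    """Brus-equation bandgap for a spherical dot of the given radius."""
    r = radius_nm * 1e-9
    confinement = HBAR ** 2 * math.pi ** 2 / (2 * r ** 2) * (1 / (ME * M0) + 1 / (MH * M0))
    coulomb = 1.8 * Q ** 2 / (4 * math.pi * EPS0 * EPS_R * r)
    return EG_EV + (confinement - coulomb) / Q


def emission_wavelength_nm(radius_nm: float) -> float:
    """Approximate emission at the bandgap energy (ignores the Stokes shift)."""
    return HC_EV_NM / bandgap_ev(radius_nm)


for radius in (2.0, 2.5, 3.5, 5.0):
    print(f"R = {radius} nm -> ~{emission_wavelength_nm(radius):.0f} nm")
```

Larger radii give longer (redder) wavelengths, matching the rule of thumb that large dots glow red and small ones blue.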

Unlike conventional sensor materials, quantum dots can be fine-tuned during manufacturing to optimize their light-sensing capabilities, giving sensor makers finer control over spectral response and, in turn, giving photographers better image quality and color accuracy.

Integration with 3D Sensors

Quantum dots are also being developed to improve how 3D sensors capture and process depth information, with potential gains in both accuracy and sensitivity. These tiny semiconductor particles, typically just a few nanometers in size, act as efficient light absorbers whose response can be tuned, which can enhance traditional 3D sensors in several key ways.

When integrated into 3D sensor systems, quantum dots can improve the detection of reflected infrared light, which is crucial for depth mapping. Because their absorption can be tuned to the wavelength of the sensor's own infrared emitter, including bands beyond the reach of silicon, a quantum dot layer can help reject ambient light interference, resulting in cleaner, more precise depth data. This is particularly valuable in challenging lighting conditions where conventional sensors might struggle.

The enhanced sensitivity provided by quantum dots also means 3D sensors can work effectively at greater distances while consuming less power. For photographers and imaging professionals, this translates to more reliable depth mapping in large spaces and better battery life in portable devices.
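A back-of-the-envelope model shows why sensitivity buys range or battery life. For a diffuse target lit by the sensor's own emitter, the signal each pixel receives falls roughly with the square of distance, so it scales as P·η/d² for emitter power P and detection efficiency η. The sketch assumes this inverse-square model and ignores ambient light and noise; the 2× efficiency gain is a hypothetical figure.

```python
import math


def relative_signal(power, efficiency, distance_m):
    """Per-pixel return signal for a diffuse target, up to a constant: P * eta / d^2."""
    return power * efficiency / distance_m ** 2


def range_gain(efficiency_gain, power_ratio=1.0):
    """Factor by which the usable distance grows for the same detected signal."""
    return math.sqrt(efficiency_gain * power_ratio)


def power_needed(efficiency_gain, range_factor=1.0):
    """Emitter power (relative to baseline) needed to reach range_factor times the distance."""
    return range_factor ** 2 / efficiency_gain


print(range_gain(2.0))       # same power, twice the efficiency: about 1.41x the range
print(power_needed(2.0))     # same range, twice the efficiency: half the power
```

The square root is the key design constraint: doubling sensitivity does not double range, but it can halve emitter power at the same range.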

Real-world applications of this technology include improved facial recognition systems, more accurate autofocus in low light, and enhanced depth effects in portrait photography. The integration has also led to more compact sensor designs, allowing manufacturers to create smaller, more efficient cameras without compromising on performance.

A particularly exciting prospect is that the cleaner depth data quantum dots can provide may enable better object edge detection, resulting in more natural-looking depth effects and more precise subject separation in computational photography.

Real-World Applications and Benefits

Enhanced Low-Light Performance

One of the most remarkable features of 3D camera sensors is their performance in challenging lighting conditions. By combining advanced pixel architecture with quantum dot technology, some of these sensors are reported to capture up to three times more light than traditional sensors, making them a potential game-changer for low-light photography.

The secret lies in the sensor's ability to detect photons more efficiently. Quantum dot layers can absorb a larger share of incoming photons, while pixels designed with deeper wells can store more charge before saturating, preserving highlight detail alongside the extra signal. This combination allows photographers to shoot in dimly lit environments without sacrificing image quality or relying heavily on high ISO settings.
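Why more collected light means cleaner images follows from a standard noise model: photon arrival is Poisson-distributed, so with S signal electrons, read noise σ_r, and dark signal D, SNR = S / √(S + D + σ_r²). The sketch takes the "three times more light" figure above as an input assumption rather than a measured result; the read-noise value is illustrative.

```python
import math


def snr(photons, quantum_efficiency=1.0, read_noise_e=0.0, dark_e=0.0):
    """Signal-to-noise ratio with shot, dark, and read noise (all in electrons)."""
    signal = photons * quantum_efficiency
    noise = math.sqrt(signal + dark_e + read_noise_e ** 2)
    return signal / noise


bright = snr(30_000) / snr(10_000)                             # shot-noise limited
dim = snr(60, read_noise_e=5.0) / snr(20, read_noise_e=5.0)    # read noise matters
print(f"3x light, bright scene: SNR x{bright:.2f}")
print(f"3x light, dim scene:    SNR x{dim:.2f}")
```

In bright scenes tripling the light improves SNR by about √3 ≈ 1.73; in very dim scenes, where read noise dominates, the improvement is larger, which is why sensitivity matters most at night.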

In practical terms, this means cleaner night shots, better indoor photography without flash, and improved performance during golden and blue hours. Wedding photographers, for instance, can capture reception moments with remarkable clarity, while street photographers can work confidently in twilight conditions.

The technology also reduces noise typically associated with low-light shooting, producing images with better color accuracy and sharper details. This enhanced capability means photographers can maintain faster shutter speeds in challenging light, reducing motion blur while keeping ISO levels manageable.
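The shutter-speed trade-off is simple exposure arithmetic: a light gain of g is worth log₂ g stops, which can be spent on a shutter g times faster at the same ISO, and motion blur in pixels is the subject's speed across the image multiplied by the exposure time. The threefold gain and the 900 px/s subject speed below are assumptions for illustration.

```python
import math


def stops_gained(light_gain):
    """Each stop doubles the light, so a gain g is worth log2(g) stops."""
    return math.log2(light_gain)


def faster_exposure(exposure_s, light_gain):
    """Shorter exposure giving the same total light at the same ISO."""
    return exposure_s / light_gain


def motion_blur_px(image_speed_px_per_s, exposure_s):
    """Length of the blur streak for a subject moving across the frame."""
    return image_speed_px_per_s * exposure_s


gain = 3.0
t_old = 1 / 30
t_new = faster_exposure(t_old, gain)
print(f"{stops_gained(gain):.2f} stops: 1/30 s becomes 1/{1 / t_new:.0f} s")
print(motion_blur_px(900, t_old), "px of blur ->", motion_blur_px(900, t_new), "px")
```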

Improved Depth Mapping

3D camera sensors have revolutionized depth mapping, bringing professional-grade portrait photography capabilities to a wider range of devices. By capturing detailed depth information, these sensors create precise separation between subjects and their backgrounds, resulting in more natural-looking bokeh effects that rival those produced by expensive DSLR lenses.

The improved depth mapping enables photographers to make post-capture adjustments to blur levels and focus points, offering unprecedented creative control. Unlike dual-camera systems that infer depth by matching two images in software, 3D sensors measure depth directly, which generally yields cleaner subject edges. Fine hair and transparent or reflective objects remain difficult, however, because they scatter the sensor's light or let it pass through.
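Post-capture blur control amounts to simulating a virtual lens over the depth map. A common starting point is the thin-lens circle-of-confusion formula, c = A·|z − z_f|/z · f/(z_f − f), where A = f/N is the aperture diameter, z is a pixel's depth, and z_f is the chosen focus distance. The 85 mm f/1.8 virtual lens and the pixel pitch are hypothetical parameters, not values from any particular phone's pipeline.

```python
def coc_mm(depth_m, focus_m, focal_mm=85.0, f_number=1.8):
    """Thin-lens blur-circle diameter on the sensor for a point at depth_m."""
    f = focal_mm / 1000.0
    aperture = f / f_number
    c = aperture * abs(depth_m - focus_m) / depth_m * f / (focus_m - f)
    return c * 1000.0


def blur_map_px(depth_map, focus_m, pixel_pitch_mm=0.005, **lens):
    """Per-pixel blur diameter in pixels; refocusing just means choosing a new focus_m."""
    return [[coc_mm(z, focus_m, **lens) / pixel_pitch_mm for z in row]
            for row in depth_map]


depths = [[1.5, 1.5, 6.0],
          [1.5, 3.0, 6.0]]
print(blur_map_px(depths, focus_m=1.5))   # subject sharp, background blurred
print(blur_map_px(depths, focus_m=6.0))   # refocused on the background
```

A renderer would then blur each pixel with a kernel of that diameter; the hard part in practice is handling occlusion at depth edges, which this sketch leaves out.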

This technology particularly shines in dim conditions, where conventional portrait modes that rely on matching image detail often struggle. Active 3D sensors supply their own illumination, so they perform consistently in dim indoor settings, although strong sunlight can overwhelm their infrared signal outdoors. For portrait photographers, this translates to more reliable results and fewer retakes, saving both time and effort during photo sessions.

Professional photographers are increasingly incorporating these sensors into their workflow, especially for events and portrait sessions where quick, high-quality results are essential.

Computational Photography Advantages

The integration of 3D camera sensors has revolutionized modern photography by enabling computational photography capabilities that were previously impractical. One of the most impressive is using precise depth information to adjust the apparent focus point after taking the shot: software re-renders the blur around a new focal plane, provided the original capture is reasonably sharp throughout. Imagine capturing a portrait and later deciding to shift focus from the subject's eyes to their hands. With 3D sensor technology, that is now possible.

These sensors excel at scene understanding, creating detailed depth maps that enhance autofocus performance and enable natural-looking bokeh effects. Unlike portrait modes that estimate background blur from the image alone, 3D sensors measure genuine depth data, resulting in more accurate and pleasing selective focus effects.
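Depth data helps autofocus because a measured subject distance tells the camera where to put the lens directly, instead of hunting for maximum contrast. Under the thin-lens assumption 1/f = 1/s_o + 1/s_i, the lens must sit s_i = f·s_o/(s_o − f) from the sensor. Real lenses are thick, multi-element designs, so treat this as an idealized sketch; the 50 mm focal length is an example value.

```python
def image_distance_mm(subject_m, focal_mm):
    """Thin-lens image distance for a subject at subject_m meters."""
    s_o = subject_m * 1000.0
    if s_o <= focal_mm:
        raise ValueError("subject is inside the focal length; no real image")
    return focal_mm * s_o / (s_o - focal_mm)


def focus_extension_mm(subject_m, focal_mm=50.0):
    """How far the lens moves out from its infinity-focus position."""
    return image_distance_mm(subject_m, focal_mm) - focal_mm


for distance in (0.5, 2.0, 10.0):          # e.g. readings from a depth sensor
    print(distance, "m ->", round(focus_extension_mm(distance), 3), "mm")
```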

Motion tracking is another area where 3D sensors shine, offering improved subject detection and tracking in challenging conditions. This technology has particularly transformed low-light photography, as the sensor can better distinguish subjects from backgrounds even in minimal lighting.
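A depth map makes subject separation almost trivial in the simplest case: treat the nearest surface, plus a small depth tolerance, as the subject, and track the mask's centroid from frame to frame. This sketch assumes the subject is the closest object, that 0 marks pixels with no valid depth, and uses a hypothetical 0.3 m tolerance; real trackers combine depth with color, motion, and learned detectors.

```python
def subject_mask(depth_rows, tolerance_m=0.3):
    """Mark pixels within tolerance_m of the nearest valid depth (0 = no reading)."""
    valid = [d for row in depth_rows for d in row if d > 0]
    if not valid:
        return [[False] * len(row) for row in depth_rows]
    nearest = min(valid)
    return [[0 < d <= nearest + tolerance_m for d in row] for row in depth_rows]


def mask_centroid(mask):
    """(row, col) centroid of a boolean mask, or None if it is empty."""
    points = [(r, c) for r, row in enumerate(mask) for c, on in enumerate(row) if on]
    if not points:
        return None
    n = len(points)
    return (sum(r for r, _ in points) / n, sum(c for _, c in points) / n)


frame = [[3.0, 3.0, 3.0, 3.0],
         [3.0, 1.2, 1.3, 3.0],
         [3.0, 1.25, 0.0, 3.0]]
mask = subject_mask(frame)
print(mask_centroid(mask))
```

Because the separation uses distance rather than brightness, it keeps working when the subject and background have similar colors or the scene is dark, as long as the depth sensor itself gets a usable return.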

For creative photographers, 3D sensors open up new possibilities in composition and storytelling. The ability to generate accurate 3D models from photographs has applications ranging from architectural visualization to creating immersive virtual reality experiences. Additionally, the enhanced depth perception allows for more precise exposure calculations and better handling of complex lighting situations, resulting in more balanced and natural-looking images.
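Turning a depth map into a 3D model starts with back-projection through a pinhole camera model: a pixel (u, v) with depth Z maps to X = (u − c_x)·Z/f_x and Y = (v − c_y)·Z/f_y. The intrinsics f_x, f_y, c_x, c_y come from calibration; the values below are placeholders. Meshing and merging many views, which full 3D modeling also needs, is beyond this sketch.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def depth_to_points(depth_rows: List[List[float]], fx: float, fy: float,
                    cx: float, cy: float) -> List[Point]:
    """Back-project every valid depth pixel (Z > 0) into camera coordinates in meters."""
    points = []
    for v, row in enumerate(depth_rows):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # no depth reading at this pixel
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Tiny 2x3 depth map with placeholder intrinsics.
depth = [[2.0, 2.0, 0.0],
         [2.0, 2.5, 2.5]]
for p in depth_to_points(depth, fx=500.0, fy=500.0, cx=1.0, cy=0.0):
    print(p)
```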

Future Implications

Next-Generation Camera Features

The future of 3D camera sensor technology promises to revolutionize how we capture and interpret visual information. One of the most exciting developments is the integration of quantum dot technology, which is expected to deliver remarkable image quality improvements even in challenging lighting conditions.

Advanced AI-powered depth mapping is on the horizon, enabling cameras to create incredibly detailed 3D models in real-time. This technology will be particularly valuable for augmented reality applications, allowing for seamless integration between virtual and physical worlds. Photographers can look forward to enhanced autofocus capabilities that can track subjects with unprecedented accuracy, even as they move through complex environments.

Another breakthrough expected in 3D sensors is neuromorphic processing, which mimics how the human brain processes visual information by reacting to changes in a scene rather than reading out full frames. This could result in faster processing speeds and more efficient power consumption, making high-end 3D imaging more accessible in compact cameras and mobile devices.
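Event-based vision sensors are one concrete form of this neuromorphic idea: each pixel independently reports an "event" only when its log brightness changes by more than a contrast threshold. The sketch below simulates such events from ordinary frames; the 0.2 threshold and the function interface are assumptions for illustration, not a particular sensor's behavior.

```python
import math


def generate_events(frames, timestamps, threshold=0.2, eps=1e-6):
    """Emit (time, pixel, polarity) whenever log intensity moves by `threshold`."""
    ref = [math.log(v + eps) for v in frames[0]]   # per-pixel reference level
    events = []
    for frame, t in zip(frames[1:], timestamps[1:]):
        for x, value in enumerate(frame):
            log_v = math.log(value + eps)
            diff = log_v - ref[x]
            while abs(diff) >= threshold:          # a big change can fire several events
                polarity = 1 if diff > 0 else -1
                events.append((t, x, polarity))
                ref[x] += polarity * threshold
                diff = log_v - ref[x]
    return events


# One row of two pixels: pixel 0 doubles in brightness, pixel 1 stays constant.
print(generate_events([[1.0, 1.0], [2.0, 1.0]], [0.0, 0.01]))
```

Static pixels produce no output at all, which is where the speed and power savings come from.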

We’re also seeing the development of hybrid sensors that combine traditional RGB sensing with depth perception in a single unit. This integration will reduce camera bulk while improving overall performance, making professional-grade 3D imaging more portable than ever before. For creative professionals, these advancements mean new possibilities in computational photography, virtual set design, and immersive storytelling.

Impact on Professional Photography

The integration of 3D camera sensors is revolutionizing professional photography, offering photographers unprecedented creative control and technical capabilities. Studio photographers are finding that these sensors enable more precise depth mapping, resulting in superior autofocus performance and more accurate subject isolation for portrait work.

Wedding and event photographers particularly benefit from improved low-light performance, as 3D sensors can capture more detailed spatial information even in challenging lighting conditions. This technology also allows for post-production depth adjustments, giving professionals more flexibility to refine their images after the shoot.

Commercial photographers working in product photography are discovering that 3D sensors provide enhanced detail capture, making it easier to create compelling product images with accurate depth representation. The technology’s ability to capture precise dimensional data is proving invaluable for e-commerce and catalog work.
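Dimensional measurement from depth reuses the pinhole model: back-project two pixels with their measured depths into 3D, then take the straight-line distance between them. This also handles edges that recede from the camera, not just flat, face-on ones. The intrinsics and pixel coordinates are placeholder values; real measurements also depend on calibration quality and depth accuracy.

```python
import math


def back_project(u, v, z, fx, fy, cx, cy):
    """Pixel (u, v) at depth z (meters) -> 3D point in camera coordinates."""
    return ((u - cx) * z / fx, (v - cy) * z / fy, z)


def measure_m(pixel_a, pixel_b, fx=600.0, fy=600.0, cx=320.0, cy=240.0):
    """Straight-line distance between two (u, v, depth) samples, in meters."""
    pa = back_project(*pixel_a, fx, fy, cx, cy)
    pb = back_project(*pixel_b, fx, fy, cx, cy)
    return math.dist(pa, pb)


# Hypothetical product edge: two corners 300 px apart, both 1 m away.
print(measure_m((170, 240, 1.0), (470, 240, 1.0)))
# The same corners if the right one sits 0.2 m farther back.
print(measure_m((170, 240, 1.0), (470, 240, 1.2)))
```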

For architectural photographers, 3D sensors offer improved perspective control and more accurate spatial relationships, resulting in more realistic representations of interior and exterior spaces. The technology also enables better HDR processing by incorporating depth information into exposure calculations.
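One plausible way to bring depth into exposure calculations is depth-weighted metering: weight the luminance of pixels near the subject's depth more heavily than the background, then compute the exposure correction toward a mid-gray target. This is a hypothetical sketch, not a documented camera algorithm; the 18% target, the depth band, and the background weight are all assumptions.

```python
import math


def exposure_correction_ev(luma_rows, depth_rows, subject_depth_m,
                           band_m=0.5, background_weight=0.1, target=0.18):
    """EV change that brings depth-weighted mean luminance (0..1 scale) to target."""
    total = weight_sum = 0.0
    for luma_row, depth_row in zip(luma_rows, depth_rows):
        for y, z in zip(luma_row, depth_row):
            w = 1.0 if abs(z - subject_depth_m) <= band_m else background_weight
            total += w * y
            weight_sum += w
    mean = total / weight_sum
    return math.log2(target / mean)   # positive: brighten, negative: darken


# Backlit portrait: dark subject at 1.5 m, bright window at 5 m.
luma = [[0.72, 0.045, 0.72],
        [0.72, 0.045, 0.72]]
depth = [[5.0, 1.5, 5.0],
         [5.0, 1.5, 5.0]]
print(exposure_correction_ev(luma, depth, subject_depth_m=1.5))
print(exposure_correction_ev(luma, depth, 1.5, background_weight=1.0))  # plain average
```

A plain average darkens this backlit frame, while the depth-weighted version pushes exposure up toward the subject; an HDR pipeline could use the same weights to decide which bracketed frame to favor in each region.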

Perhaps most significantly, 3D sensor technology is opening new revenue streams for professionals. The ability to capture detailed spatial information allows photographers to offer innovative services like virtual tours, 3D modeling, and augmented reality experiences, expanding their business opportunities beyond traditional photography services.

While the learning curve can be steep, many professionals find that mastering 3D sensor technology gives them a competitive edge in an increasingly technical industry.

As we look toward the future of photography, 3D camera sensor technology stands as a transformative force that’s reshaping how we capture and interact with visual content. The integration of advanced sensing capabilities, depth perception, and real-time processing has opened up possibilities that were once confined to science fiction.

The impact of this technology extends far beyond traditional photography. From smartphone cameras that can create perfect portrait shots with accurate depth mapping to professional systems that enable groundbreaking augmented reality experiences, 3D camera sensors are becoming increasingly integral to our visual world.

For photographers, both amateur and professional, this technology represents a new creative frontier. The ability to capture not just light and color, but also depth and spatial information, provides unprecedented control over image composition and post-processing capabilities. This enhanced control allows for more precise adjustments, better subject isolation, and more immersive final results.

Looking ahead, we can expect 3D camera sensor technology to become even more sophisticated and accessible. As manufacturers continue to refine these systems, we’ll likely see improved accuracy, faster processing speeds, and new applications we haven’t yet imagined. The technology’s influence on fields like virtual reality, autonomous vehicles, and medical imaging suggests that its importance will only grow in the coming years.

For those invested in photography’s future, understanding and embracing 3D camera sensor technology isn’t just beneficial – it’s essential. This technology represents not just an evolution in how we capture images, but a revolution in how we perceive and interact with the visual world around us.